One-way ANOVA | When and How to Use It (With Examples)

ANOVA, which stands for Analysis of Variance, is a statistical test used to analyze the difference between the means of more than two groups.

A one-way ANOVA uses one independent variable, while a two-way ANOVA uses two independent variables.

When to use a one-way ANOVA

Use a one-way ANOVA when you have collected data about one categorical independent variable and one quantitative dependent variable. The independent variable should have at least three levels (i.e. at least three different groups or categories).

ANOVA tells you if the dependent variable changes according to the level of the independent variable. For example:

- Your independent variable is social media use, and you assign groups to low, medium, and high levels of social media use to find out if there is a difference in hours of sleep per night.

- Your independent variable is brand of soda, and you collect data on Coke, Pepsi, Sprite, and Fanta to find out if there is a difference in the price per 100ml.

- Your independent variable is type of fertilizer, and you treat crop fields with mixtures 1, 2 and 3 to find out if there is a difference in crop yield.

The null hypothesis (H0) of ANOVA is that there is no difference among group means. The alternative hypothesis (Ha) is that at least one group mean differs from the others.

If you only want to compare two groups, use a t test instead.

How does an ANOVA test work?

ANOVA determines whether the groups created by the levels of the independent variable are statistically different by calculating whether the means of the treatment levels are different from the overall mean of the dependent variable.

If any of the group means is significantly different from the overall mean, then the null hypothesis is rejected.

ANOVA uses the F test for statistical significance. This allows for comparison of multiple means at once, because the error is calculated for the whole set of comparisons rather than for each individual pairwise comparison (which would happen with a series of t tests).
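To see why a single test is preferable to many pairwise t tests, here is a rough worked calculation. It assumes each of the m pairwise tests is run at significance level α and treats the tests as independent, which is a simplification (pairwise tests that share a group are not truly independent), so the figure is only an approximation.

```latex
\begin{align*}
m &= \frac{k(k-1)}{2}
  && \text{number of pairwise comparisons among } k \text{ groups} \\
P(\text{at least one false positive}) &= 1 - (1 - \alpha)^{m} \\
1 - 0.95^{3} &\approx 0.143
  && \text{for } k = 3,\ m = 3,\ \alpha = 0.05
\end{align*}
```

With four groups (m = 6) the same approximation gives about 0.265, so the chance of a spurious finding grows quickly as groups are added.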

The F test compares the variance between the group means with the variance within the groups. If the variance within groups is small relative to the variance between groups, the F test will find a higher F value, and therefore a higher likelihood that the difference observed is real and not due to chance.
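Written out, the F statistic for a one-way ANOVA can be sketched as follows. The notation is our own and does not come from the R output: k is the number of groups, N the total number of observations, n_j the size of group j, ȳ_j the mean of group j, and ȳ the overall mean.

```latex
\begin{align*}
SS_{\text{between}} &= \sum_{j=1}^{k} n_j \,(\bar{y}_j - \bar{y})^2 \\
SS_{\text{within}}  &= \sum_{j=1}^{k} \sum_{i=1}^{n_j} (y_{ij} - \bar{y}_j)^2 \\
F &= \frac{SS_{\text{between}} / (k - 1)}{SS_{\text{within}} / (N - k)}
\end{align*}
```

The numerator is the mean square between groups and the denominator is the mean square within groups (the residual mean square).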

Assumptions of ANOVA

The assumptions of the ANOVA test are the same as the general assumptions for any parametric test:

- Independence of observations: the data were collected using statistically valid sampling methods, and there are no hidden relationships among observations. If your data fail to meet this assumption because you have a confounding variable that you need to control for statistically, use an ANOVA with blocking variables.

- Normally-distributed response variable: the values of the dependent variable follow a normal distribution within each group.

- Homogeneity of variance: The variation within each group being compared is similar for every group. If the variances are different among the groups, then ANOVA probably isn’t the right fit for the data.
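As a minimal sketch of how the normality and equal-variance assumptions might be checked in R, the example below uses a small made-up dataset. The names toy.data and toy.fit are hypothetical and this is not the crop yield data used later in the article. Shapiro-Wilk and Bartlett's test are one common choice among several, not the only option.

```r
# Hypothetical toy data (NOT the article's crop.data):
# three fertilizer groups with three plots each.
toy.data <- data.frame(
  fertilizer = factor(rep(c("1", "2", "3"), each = 3)),
  yield      = c(4, 5, 6, 5, 6, 7, 8, 9, 10)
)

toy.fit <- aov(yield ~ fertilizer, data = toy.data)

# Normality: Shapiro-Wilk test on the model residuals.
normality <- shapiro.test(residuals(toy.fit))

# Homogeneity of variance: Bartlett's test across the fertilizer groups.
variance.check <- bartlett.test(yield ~ fertilizer, data = toy.data)

# Small p values would suggest that an assumption is violated.
normality$p.value
variance.check$p.value
```

With samples this small, both tests have little power, so it is usually worth inspecting the residuals visually as well, for example with plot(toy.fit).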

Performing a one-way ANOVA

While you can perform an ANOVA by hand, it is difficult to do so with more than a few observations. We will perform our analysis in the R statistical program because it is free, powerful, and widely available. For a full walkthrough of this ANOVA example, see our guide to performing ANOVA in R.

The sample dataset from our imaginary crop yield experiment contains data about:

- fertilizer type (type 1, 2, or 3)

- planting density (1 = low density, 2 = high density)

- planting location in the field (blocks 1, 2, 3, or 4)

- final crop yield (in bushels per acre).

This gives us enough information to run several different ANOVA tests and see which model is the best fit for the data.

For the one-way ANOVA, we will only analyze the effect of fertilizer type on crop yield.

After loading the dataset into our R environment, we can use the command aov() to run an ANOVA. In this example we will model the differences in the mean of the response variable, crop yield, as a function of type of fertilizer.

one.way <- aov(yield ~ fertilizer, data = crop.data)

Interpreting the results

To view the summary of a statistical model in R, use the summary() function.

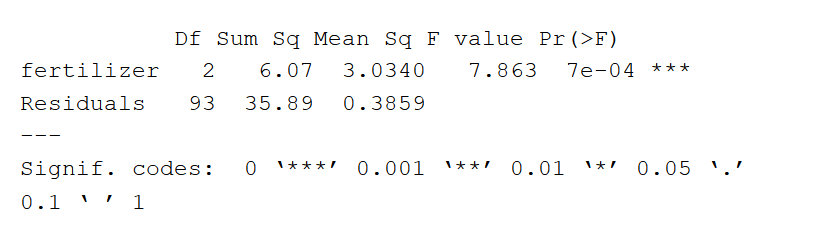

summary(one.way)The summary of an ANOVA test (in R) looks like this:

The ANOVA output provides an estimate of how much variation in the dependent variable can be explained by the independent variable.

- The first column lists the independent variable along with the model residuals (aka the model error).

- The Df column displays the degrees of freedom for the independent variable (calculated by taking the number of levels within the variable and subtracting 1), and the degrees of freedom for the residuals (calculated by taking the total number of observations minus 1, then subtracting the degrees of freedom used by each of the independent variables; for a one-way ANOVA this is the number of observations minus the number of groups).

- The Sum Sq column displays the sum of squares (a.k.a. the total variation) between the group means and the overall mean explained by that variable. The sum of squares for the fertilizer variable is 6.07, while the sum of squares of the residuals is 35.89.

- The Mean Sq column is the mean of the sum of squares, which is calculated by dividing the sum of squares by the degrees of freedom.

- The F value column is the test statistic from the F test: the mean square of each independent variable divided by the mean square of the residuals. The larger the F value, the more likely it is that the variation associated with the independent variable is real and not due to chance.

- The Pr(>F) column is the p value of the F statistic. This shows how likely it is that an F value at least as large as the one calculated from the test would have occurred if the null hypothesis of no difference among group means were true.

Because the p value for the independent variable, fertilizer, is below 0.05, the result is statistically significant: it is likely that fertilizer type has a real effect on average crop yield.
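To make the columns of the summary table concrete, the sketch below rebuilds each one by hand and compares the result with aov(). It uses a small made-up dataset rather than the article's crop data, and all object names (toy.data, ss.between, and so on) are hypothetical.

```r
# Hypothetical toy data (NOT the article's crop.data).
toy.data <- data.frame(
  fertilizer = factor(rep(c("1", "2", "3"), each = 3)),
  yield      = c(4, 5, 6, 5, 6, 7, 8, 9, 10)
)

n.total  <- nrow(toy.data)                # 9 observations
k.groups <- nlevels(toy.data$fertilizer)  # 3 levels

grand.mean  <- mean(toy.data$yield)
group.means <- tapply(toy.data$yield, toy.data$fertilizer, mean)
group.sizes <- tapply(toy.data$yield, toy.data$fertilizer, length)

# Df column
df.between <- k.groups - 1        # 2
df.within  <- n.total - k.groups  # 6

# Sum Sq column
ss.between <- sum(group.sizes * (group.means - grand.mean)^2)  # 26
# ave() returns each observation's own group mean.
ss.within  <- sum((toy.data$yield -
                   ave(toy.data$yield, toy.data$fertilizer))^2)  # 6

# Mean Sq column
ms.between <- ss.between / df.between  # 13
ms.within  <- ss.within / df.within    # 1

# F value and Pr(>F) columns
f.value <- ms.between / ms.within                                  # 13
p.value <- pf(f.value, df.between, df.within, lower.tail = FALSE)  # about 0.0066

# The hand calculation should agree with aov().
toy.table <- summary(aov(yield ~ fertilizer, data = toy.data))[[1]]
all.equal(f.value, toy.table[["F value"]][1])
```

Note that fertilizer is stored as a factor here. If the grouping variable were left as a plain number, aov() would treat it as a continuous predictor with a single degree of freedom.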

Post-hoc testing

ANOVA will tell you if there are differences among the levels of the independent variable, but not which differences are significant. To find how the treatment levels differ from one another, perform Tukey’s Honestly Significant Difference (HSD) post-hoc test, which is available in R as TukeyHSD().

TukeyHSD(one.way)The Tukey test runs pairwise comparisons among each of the groups, and uses a conservative error estimate to find the groups which are statistically different from one another.

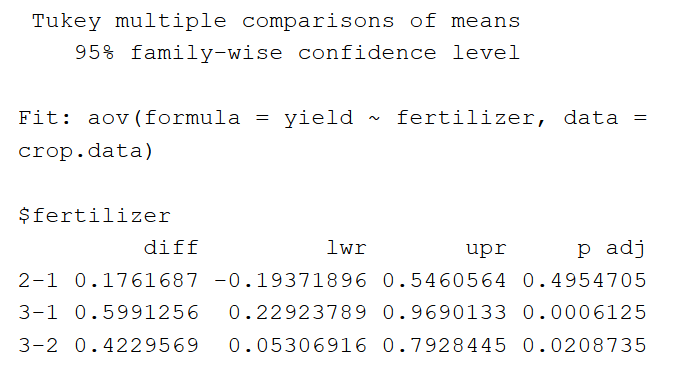

The output of the TukeyHSD looks like this:

First, the table reports the model being tested (‘Fit’). Next it lists the pairwise differences among groups for the independent variable.

Under the ‘$fertilizer’ section, we see the mean difference between each fertilizer treatment (‘diff’), the lower and upper bounds of the 95% confidence interval (‘lwr’ and ‘upr’), and the p value, adjusted for multiple pairwise comparisons.

The pairwise comparisons show that fertilizer type 3 has a significantly higher mean yield than both fertilizer 2 and fertilizer 1, but the difference between the mean yields of fertilizers 2 and 1 is not statistically significant.
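The same table can also be inspected programmatically. The sketch below runs TukeyHSD() on a small made-up dataset (again not the article's crop data; toy.data, toy.fit and tukey.pairs are hypothetical names) and pulls out the columns described above.

```r
# Hypothetical toy data (NOT the article's crop.data).
toy.data <- data.frame(
  fertilizer = factor(rep(c("1", "2", "3"), each = 3)),
  yield      = c(4, 5, 6, 5, 6, 7, 8, 9, 10)
)

toy.fit   <- aov(yield ~ fertilizer, data = toy.data)
toy.tukey <- TukeyHSD(toy.fit)

# One matrix per factor, with columns diff, lwr, upr and p adj,
# and one row per pair of levels ("2-1", "3-1", "3-2").
tukey.pairs <- toy.tukey$fertilizer

# Mean differences between each pair of groups.
tukey.pairs[, "diff"]

# Pairs whose adjusted p value is below 0.05.
significant.pairs <- rownames(tukey.pairs)[tukey.pairs[, "p adj"] < 0.05]
significant.pairs
```

In this toy data, group 3 differs from both other groups while groups 1 and 2 do not differ from each other, which happens to mirror the pattern reported for the crop data.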

Reporting the results of ANOVA

When reporting the results of an ANOVA, include a brief description of the variables you tested, the F value, degrees of freedom, and p values for each independent variable, and explain what the results mean.
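One way to pull the reported values straight from the model, rather than copying them by hand, is sketched below. The dataset is made up (not the article's crop data), the object names are hypothetical, and the output format is only one possible convention; check the style guide you are writing for.

```r
# Hypothetical toy data (NOT the article's crop.data).
toy.data <- data.frame(
  fertilizer = factor(rep(c("1", "2", "3"), each = 3)),
  yield      = c(4, 5, 6, 5, 6, 7, 8, 9, 10)
)

toy.fit   <- aov(yield ~ fertilizer, data = toy.data)
toy.table <- summary(toy.fit)[[1]]

# Row 1 is the independent variable, row 2 is the residuals.
report <- sprintf(
  "F(%.0f, %.0f) = %.2f, p = %.3f",
  toy.table[["Df"]][1],
  toy.table[["Df"]][2],
  toy.table[["F value"]][1],
  toy.table[["Pr(>F)"]][1]
)

report  # "F(2, 6) = 13.00, p = 0.007"
```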

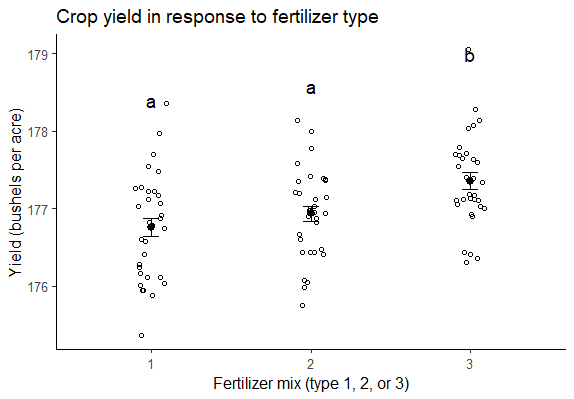

If you want to provide more detailed information about the differences found in your test, you can also include a graph of the ANOVA results, with grouping letters above each level of the independent variable to show which groups are statistically different from one another:


Frequently asked questions about one-way ANOVA

- What is the difference between a one-way and a two-way ANOVA?


The only difference between one-way and two-way ANOVA is the number of independent variables. A one-way ANOVA has one independent variable, while a two-way ANOVA has two.

- One-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka) and race finish times in a marathon.

- Two-way ANOVA: Testing the relationship between shoe brand (Nike, Adidas, Saucony, Hoka), runner age group (junior, senior, master’s), and race finishing times in a marathon.

All ANOVAs are designed to test for differences among three or more groups. If you are only testing for a difference between two groups, use a t-test instead.

- What is a factorial ANOVA?


A factorial ANOVA is any ANOVA that uses more than one categorical independent variable. A two-way ANOVA is a type of factorial ANOVA.

Some examples of factorial ANOVAs include:

- Testing the combined effects of vaccination (vaccinated or not vaccinated) and health status (healthy or pre-existing condition) on the rate of flu infection in a population.

- Testing the effects of marital status (married, single, divorced, widowed), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population.

- Testing the effects of feed type (type A, B, or C) and barn crowding (not crowded, somewhat crowded, very crowded) on the final weight of chickens in a commercial farming operation.

- How is statistical significance calculated in an ANOVA?


In ANOVA, the null hypothesis is that there is no difference among group means. If any group differs significantly from the overall group mean, then the ANOVA will report a statistically significant result.

Significant differences among group means are calculated using the F statistic, which is the ratio of the mean sum of squares (the variance explained by the independent variable) to the mean square error (the variance left over).

If the F statistic is higher than the critical value (the value of F that corresponds with your alpha value, usually 0.05), then the difference among groups is deemed statistically significant.
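The comparison against a critical value can be sketched in R with qf() and pf(). The degrees of freedom and the F value below are made-up numbers chosen for illustration (three groups, nine observations), not results from the article.

```r
alpha      <- 0.05
df.between <- 2   # hypothetical: 3 groups - 1
df.within  <- 6   # hypothetical: 9 observations - 3 groups
f.value    <- 13  # hypothetical F statistic

# Critical value: the F value that cuts off the upper 5% of the
# F distribution when the null hypothesis is true (about 5.14 here).
f.critical <- qf(1 - alpha, df.between, df.within)

# The two decision rules are equivalent.
exceeds.critical <- f.value > f.critical
p.below.alpha    <- pf(f.value, df.between, df.within,
                       lower.tail = FALSE) < alpha

exceeds.critical  # TRUE
p.below.alpha     # TRUE
```

In practice, software reports the p value directly, so the critical value is rarely looked up by hand.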

- What is the difference between quantitative and categorical variables?


Quantitative variables are any variables where the data represent amounts (e.g. height, weight, or age).

Categorical variables are any variables where the data represent groups. This includes rankings (e.g. finishing places in a race), classifications (e.g. brands of cereal), and binary outcomes (e.g. coin flips).

You need to know what type of variables you are working with to choose the right statistical test for your data and interpret your results.

Cite this Scribbr article


Bevans, R. (2023, June 22). One-way ANOVA | When and How to Use It (With Examples). Scribbr. Retrieved November 3, 2023, from https://www.scribbr.com/statistics/one-way-anova/